ChatGPT to Computer Vision: Artificial intelligence and its various algorithms have generated a lot of hype.

It is widely accepted that algorithms combined with the right machinery can deliver remarkable results, whether at home or in our businesses.

It helps to understand the primary drivers behind many of these technological advances, such as the recent development of “deep learning.”

Deep-learning transformers are a relatively new technology that is accelerating the evolution of artificial intelligence (AI). These sophisticated models are trained on large amounts of data with substantial processing power, and they perform well at a variety of tasks.

Deep-learning transformers first rose to prominence for their capacity to comprehend and interpret human language.

They are now creating a lot of buzz in the world of computer vision, which marks a big change in how they are used and why they matter. This shift demonstrates the increasing impact and versatility of these models.

According to Fortune Business Insights, the global deep learning (DL) market was valued at USD 17.60 billion in 2023 and is expected to grow to USD 24.53 billion in 2024, with a compound annual growth rate (CAGR) of 36.7% from 2024 to 2032.

In this blog post, we will delve into the world of deep-learning transformers and explore how they are shaping our world, particularly in the context of computer vision.

We will trace the journey from ChatGPT, a renowned language model, to the integration of deep-learning transformers in processing visual data.

By understanding the potential of these transformative technologies, we can gain insights into the advancements driving AI forward.

Introduction to Transformers

Transformers are neural networks that learn context and meaning by tracking relationships in sequential data.

Transformer models employ a set of mathematical techniques called attention or self-attention.

These techniques help determine how distant data elements in a sequence influence and depend on one another.

The Transformer was first described in a 2017 paper from Google and is one of the most capable model architectures developed to date.

It triggered a new wave of machine learning advancements sometimes known as “Transformer AI”.

Because they process sequential data well, transformers are a deep-learning architecture that has changed many different fields.

Since they were first used for natural language processing (NLP) tasks, transformers have been applied in speech recognition, computer vision, and other fields.

Transformers use attention processes to capture dependencies between all sequence elements at once, in contrast to standard recurrent neural networks (RNNs) that process sequential data in a step-by-step fashion.

This parallel processing lets transformers handle long-range dependencies more effectively and improves efficiency on demanding tasks.
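To make the idea of attention more concrete, here is a minimal sketch of scaled dot-product self-attention written with NumPy. The sizes, the random weights, and the function names are assumptions chosen for illustration only; real models learn these weights during training and use several attention heads.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum before exponentiating for numerical stability.
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention for x of shape (seq_len, d_model)."""
    q = x @ w_q  # queries
    k = x @ w_k  # keys
    v = x @ w_v  # values
    d_k = q.shape[-1]
    # Every position is compared with every other position in one matrix product.
    scores = q @ k.T / np.sqrt(d_k)      # shape (seq_len, seq_len)
    weights = softmax(scores, axis=-1)   # each row sums to 1
    return weights @ v, weights

# Illustrative sizes and random weights (assumptions, not trained values).
rng = np.random.default_rng(0)
seq_len, d_model, d_k = 5, 8, 4
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

output, weights = self_attention(x, w_q, w_k, w_v)
print(output.shape)   # (5, 4)
print(weights.shape)  # (5, 5)
```

Note that there is no loop over time steps: the whole sequence is handled with a few matrix products, which is what allows the parallelism described above.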

Understanding Deep Learning & Deep Learning Transformers

Deep Learning

Deep learning is a subset of artificial intelligence (AI) and machine learning that loosely imitates the way humans gain certain types of knowledge.

It is possible to train deep learning models to carry out classification tasks and identify patterns in a variety of data, including text, audio, images, and more.

It is also used to automate tasks such as image description and audio transcription that would typically require human intelligence.

Predictive modeling, statistics, and deep learning are all crucial components of data science.

Deep learning facilitates the faster and easier collection, analysis, and interpretation of massive volumes of data, which is highly advantageous for data scientists.

The core idea of deep learning is that a computer can learn to perform tasks directly from data such as text, images, and sounds.

These models can produce highly accurate results, occasionally outperforming humans.

Deep learning models make use of enormous datasets, which demand a lot of processing power.

They achieve accuracy by employing neural networks with many layers, loosely inspired by the structure of the human brain.

Deep Learning Transformers

Deep learning is a branch of AI that uses artificial neural networks to help machines learn from data and make sound decisions.

This paradigm is the basis for deep-learning transformers such as OpenAI’s ChatGPT, which combine deep neural networks with the transformer architecture.

Thanks to their attention mechanisms and self-attention layers, transformers capture complex patterns and dependencies within data, which strengthens deep-learning models.

Deep-learning transformers have an impact that goes beyond the confines of particular applications and domains.

These models have proven themselves adept at machine translation, recommendation systems, and natural language processing.

Their incorporation into computer vision processing has, however, created a whole new set of opportunities.

The Transformer’s attention mechanism grew out of the encoder-decoder architecture used with RNNs. It can handle sequence-to-sequence (seq2seq) tasks while eliminating the sequential component.

Because a Transformer does not process data in sequential order, it can be parallelized more heavily and can process data faster than an RNN.

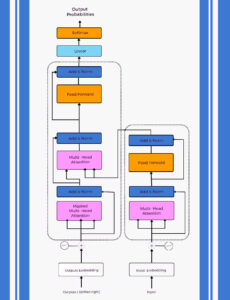

General architecture of the Transformer deep learning model

This figure illustrates the Transformer deep learning model’s overall architecture.

There are two main components of the Transformer:

- The encoder stack: Nx identical encoder layers (in the original published paper, Nx = 6).

- The decoder stack: Nx identical decoder layers (in the original published paper, Nx = 6).

Because the model contains no recurrence or convolution, positional encodings are added to the input embeddings at the bottom of the encoder and decoder stacks so that the model can make use of sequence order.
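As a rough illustration of positional encoding, the sketch below builds the sinusoidal encoding used in the original Transformer paper. The function name and the example sizes are assumptions for this post, and the code assumes an even model dimension.

```python
import numpy as np

def sinusoidal_positional_encoding(seq_len, d_model):
    """Return a (seq_len, d_model) matrix of sinusoidal position encodings.

    Assumes d_model is even: even columns hold sines, odd columns cosines.
    """
    if d_model % 2 != 0:
        raise ValueError("d_model must be even for this sketch")
    positions = np.arange(seq_len)[:, None]      # shape (seq_len, 1)
    even_dims = np.arange(0, d_model, 2)[None, :]  # shape (1, d_model / 2)
    angles = positions / np.power(10000.0, even_dims / d_model)
    encoding = np.zeros((seq_len, d_model))
    encoding[:, 0::2] = np.sin(angles)
    encoding[:, 1::2] = np.cos(angles)
    return encoding

# Illustrative sizes: 10 tokens, 16-dimensional embeddings.
pe = sinusoidal_positional_encoding(seq_len=10, d_model=16)
token_embeddings = np.zeros((10, 16))   # stand-in for learned embeddings
model_input = token_embeddings + pe     # order information is simply added
print(model_input.shape)  # (10, 16)
```

Because each position gets a distinct pattern of sines and cosines, two identical tokens at different positions produce different inputs, which gives the attention layers a way to tell them apart.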

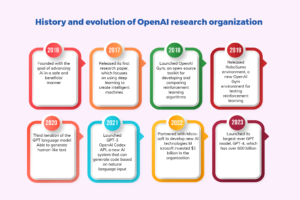

Evolution of OpenAI

OpenAI is a groundbreaking organization that has influenced the course of research and development in the rapidly changing field of artificial intelligence.

Tracing the history of OpenAI from its founding to its present position offers an engrossing story of invention, difficulties, and discoveries that have expanded the bounds of machine intelligence.

OpenAI was founded with the ambitious goal of ensuring that artificial general intelligence (AGI) benefits all people.

Examining OpenAI’s development, we see the organization’s dedication to innovative research, ethical considerations, and collaborative partnerships that have helped it rise to prominence in the AI world.

The history of OpenAI as an artificial intelligence research group is depicted in the figure below.

The figure shows how OpenAI has developed over the past few years, including the introduction of OpenAI Gym, the OpenAI Codex API, and the successive iterations of the Generative Pre-trained Transformer (GPT) model.

Overview of ChatGPT

ChatGPT is a sophisticated language model created by OpenAI and built on the GPT architecture. It uses deep-learning transformers to process and produce text responses that resemble those of a human.

After initial training on a large corpus of internet text, ChatGPT has gone through numerous revisions that improved its efficiency and capabilities.

ChatGPT is made to comprehend user inquiries and produce logical, contextually appropriate answers. It is capable of conversing, responding to queries, giving explanations, and even simulating human-like dialogue.

ChatGPT has acquired amazing fluency and versatility in creating text over a wide range of themes thanks to its extensive pre-training and fine-tuning.

Overview of ChatGPT architecture and training process

How ChatGPT Transformed Natural Language Processing Tasks

The introduction of ChatGPT has significantly impacted the field of natural language processing (NLP) by pushing the boundaries of what is possible in terms of language understanding and generation.

Some key aspects of its evolution are:

- Enhanced Contextual Understanding: ChatGPT uses deep-learning transformers to gather and analyze complex contextual information. It can interpret a user request in light of earlier turns in the conversation, resulting in more logical and contextually aware responses.

- Improved Coherence and Consistency: ChatGPT’s training on massive amounts of diverse text data enables it to generate more coherent and contextually appropriate responses. It can understand and maintain conversational context, ensuring a smoother and more natural dialogue experience.

- Expanded Vocabulary and Knowledge Base: Through its training, ChatGPT has acquired knowledge from various domains, enabling it to provide informative responses to a wide range of topics. Its expansive vocabulary and ability to generate diverse and relevant responses make it a valuable resource for users seeking information.

- Handling Ambiguity and Clarification: ChatGPT has undergone iterations to improve its ability to handle ambiguous queries and seek clarification when user input is unclear. It can now ask follow-up questions to disambiguate a request, enhancing the accuracy and relevance of its responses.
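One simple way to picture how conversational context is maintained is as a running list of messages that is passed to the model with every new request. The sketch below is only an illustration under that assumption: the message format, the `build_prompt` helper, and the `max_turns` limit are hypothetical and do not describe ChatGPT’s actual internals.

```python
def build_prompt(history, user_message, max_turns=4):
    """Return the messages a chat model would receive for its next reply.

    Hypothetical helper: keeps only the most recent turns so the input
    stays within a fixed budget, then appends the new user message.
    """
    recent = history[-max_turns:]
    return recent + [{"role": "user", "content": user_message}]

# Illustrative conversation in which the assistant asked for clarification.
history = [
    {"role": "user", "content": "Recommend a book about transformers."},
    {"role": "assistant",
     "content": "Do you mean the neural network architecture or the films?"},
]

prompt = build_prompt(history, "The neural network architecture.")
for message in prompt:
    print(f"{message['role']}: {message['content']}")
```

Because the earlier question and the clarifying reply travel with the new message, the short answer "The neural network architecture." can be interpreted correctly.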

Successful applications of ChatGPT

ChatGPT has found utility in various applications and domains, showcasing its versatility and potential impact:

Customer Support:

ChatGPT can be used as a chatbot or virtual assistant to offer personalized customer support. It can respond to routine inquiries, address problems, and even escalate complex cases to human agents.

A customer support chatbot can draw on linked databases to find answers to more difficult issues and can respond to a variety of requests automatically.

Content Generation:

ChatGPT can help with content development by offering concepts, recommendations, and even producing drafts of new material. It has been used to write product descriptions, essays, articles, and more.

Language Tutoring:

With its ability to explain concepts and provide examples, ChatGPT can aid in language learning and tutoring. It can assist learners by answering questions, providing explanations, and engaging in practice conversations.

Personalized Recommendations:

ChatGPT can understand user preferences and offer personalized recommendations. It can suggest books, movies, and products, or even provide travel advice based on user preferences and requirements.

Creative Writing and Storytelling:

ChatGPT’s ability to generate coherent and imaginative text has been utilized for creative writing and storytelling applications.

It can help with brainstorming ideas, plot development, and even generating dialogue for fictional characters.

Healthcare:

ChatGPT can help provide personalized treatment programs for patients who live far away.

In the telemedicine era, ChatGPT could communicate in natural language with remote patients and promptly prescribe medication.

Additionally, the model can give physicians prompt and dependable assistance because it can identify potentially harmful medications, recommend treatments for specific patient conditions, and provide instructions for handling complicated medical situations.

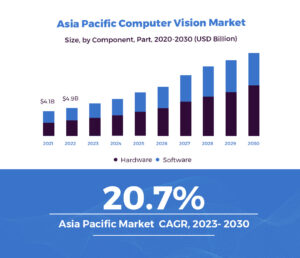

Introduction to Computer Vision Processing

Computer vision is the field of artificial intelligence that aims to enable computers to comprehend and interpret visual data in a way similar to humans.

It involves creating algorithms and models that enable machines to extract useful information and conclusions from images and videos.

Because of its many uses across industries, computer vision processing has become increasingly important in recent years.

Computer vision processing has transformed industries like healthcare, autonomous vehicles, surveillance, retail, and entertainment by allowing machines to “see” and analyze visual input.

In a wide range of fields, it has created new opportunities for automation, efficiency, and improved decision-making.

According to Grand View Research, the global computer vision market size was valued at USD 14.10 billion in 2022 and is expected to grow at a compound annual growth rate (CAGR) of 19.6% from 2023 to 2030.
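For readers who want to see what a compound annual growth rate implies, here is a small sketch of the arithmetic. It assumes the 2022 valuation is the baseline and that growth compounds once per year through 2030; the function name is ours, and the result is a back-of-the-envelope projection rather than a figure from the report.

```python
def project_value(start_value, annual_rate, years):
    """Compound start_value at annual_rate for the given number of years."""
    return start_value * (1 + annual_rate) ** years

# Assumption: USD 14.10 billion in 2022, compounding at 19.6% through 2030.
projected_2030 = project_value(14.10, 0.196, 2030 - 2022)
print(f"Projected 2030 market size: USD {projected_2030:.1f} billion")
```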

Deep-Learning Transformers in Computer Vision

In computer vision processing, deep-learning transformers, a potent type of neural network model, have changed the game.

These models process and interpret visual input with remarkable precision and efficiency by using deep learning methods, particularly transformer architectures.

Object detection, image classification, and image synthesis all require the ability to capture spatial relationships, context, and long-range dependencies within images, which is where deep-learning transformers shine.

Deep-learning transformers, in contrast to conventional computer vision techniques, learn these representations automatically from the input data, making them more versatile and capable of handling a variety of visual tasks.
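How does a model designed for sequences consume an image? One common approach, used by the Vision Transformer (ViT) family, is to cut the image into fixed-size patches and treat each flattened patch as one element of a sequence. The sketch below shows only that first step; the function name, image size, and patch size are assumptions for illustration.

```python
import numpy as np

def image_to_patches(image, patch_size):
    """Split an (H, W, C) image into a sequence of flattened square patches."""
    height, width, channels = image.shape
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be divisible by patch_size")
    rows, cols = height // patch_size, width // patch_size
    # Separate the row and column axes into (patch index, offset in patch).
    patches = image.reshape(rows, patch_size, cols, patch_size, channels)
    # Bring the two patch-index axes to the front, then flatten each patch.
    patches = patches.transpose(0, 2, 1, 3, 4)
    return patches.reshape(rows * cols, patch_size * patch_size * channels)

# Illustrative 32x32 RGB image filled with increasing values.
image = np.arange(32 * 32 * 3, dtype=np.float32).reshape(32, 32, 3)
patches = image_to_patches(image, patch_size=8)
print(patches.shape)  # (16, 192)
```

Each of the 16 rows can then be projected to an embedding and fed to attention layers in the same way as a sequence of word tokens, which is how self-attention can relate distant regions of an image.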

Advantages and Challenges of Using Deep-Learning Transformers in Computer Vision

Advantages

- Improved Accuracy: Deep-learning transformers have demonstrated state-of-the-art performance in various computer vision tasks, surpassing the capabilities of previous methods.

- End-to-end Learning: Unlike traditional computer vision pipelines, deep-learning transformers can learn end-to-end from raw visual data, eliminating the need for manual feature engineering.

- Transfer Learning: Deep-learning transformers can leverage pre-trained models on large-scale datasets, enabling faster and more efficient development of computer vision applications.

- Adaptability: Deep-learning transformers can handle diverse visual inputs, including images, videos, and even 3D data, making them versatile for various applications.

Challenges

- Data Requirements: Deep-learning transformers require large amounts of labeled data for training, which can be a challenge in domains where annotated datasets are scarce or expensive to acquire.

- Computational Resources: Training deep-learning transformers can be computationally intensive and require a robust hardware infrastructure, limiting their accessibility for some developers and researchers.

- Interpretability: Deep-learning transformers are often considered “black-box” models, making it challenging to interpret and understand the reasoning behind their predictions, raising ethical and transparency concerns.

- Robustness and Generalization: Ensuring deep-learning transformers generalize well to unseen data and are robust to variations in lighting, scale, and viewpoint remains an ongoing challenge in computer vision.

Applications of Deep-Learning Transformers in Computer Vision

Deep-learning transformers have been applied to several crucial computer vision tasks, transforming the field in numerous ways:

- Object detection and recognition: Deep-learning transformers can accurately detect and classify objects in images and videos, enabling applications like autonomous driving, surveillance, and robotics.

- Image classification and segmentation: By learning high-level features and capturing fine-grained details, deep-learning transformers have significantly improved image classification and semantic segmentation tasks.

- Image generation and style transfer: Deep-learning transformers can generate realistic and high-quality images. They can also transfer artistic styles from one image to another, enabling creative applications.

- Video analysis and understanding: Deep-learning transformers have been instrumental in analyzing and understanding video content, facilitating tasks such as action recognition, video captioning, and video surveillance.

Impact of deep-learning transformers on computer vision

The impact of deep-learning transformers on computer vision can be observed through various real-world case studies:

Deep-learning transformers in autonomous vehicles

Self-driving cars depend heavily on computer vision models powered by deep-learning transformers to effectively see and understand their environment.

Deep-learning transformers can capture long-range dependencies and contextual information, which helps in understanding the complicated environments encountered in autonomous driving.

Medical imaging and diagnostics

Deep-learning transformers have shown promising results in medical imaging, aiding in the detection and diagnosis of diseases from X-ray, MRI, and CT scan images.

Surveillance and security applications

By enabling sophisticated object recognition, tracking, and anomaly detection, deep-learning transformers improve surveillance systems and increase public safety and security.

Conclusion

Deep-learning transformers have grown beyond natural language processing into powerful computer vision technologies.

From their early success in language models like ChatGPT to their use in a range of computer vision tasks, deep-learning transformers have shaped our world.

To ensure these revolutionary technologies are used responsibly and beneficially, ethical issues must be addressed and technical obstacles overcome.

Future developments in deep-learning transformers and their computer vision applications have enormous potential.